JMETER

Apache JMeter is a 100% pure Java Desktop application designed to load-test functional behavior and measure performance. It was originally designed for testing Web Applications but has since expanded to other test functions. JMeter can be used as a unit test tool for JDBC database connections, FTP, LDAP, Web services and JMS.

If you are not familiar with the basics of JMeter, spend about 10 minutes with the following page before reading the rest of this article.

http://jmeter.apache.org/usermanual/build-web-test-plan.html

JMeter can look difficult at first because a new user usually lacks the background needed to set up a load test environment. This article concentrates on one practical approach: recording a web application using the WorkBench (file provided in the code files) and then running load tests against the recording, much as you would record and replay with QTP or Visual Studio Coded UI.

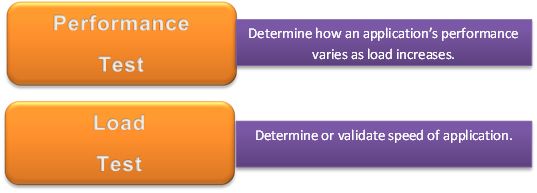

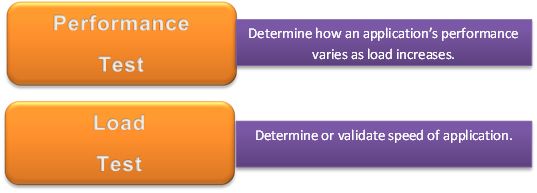

Difference between performance and load testing

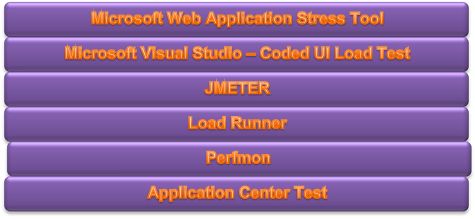

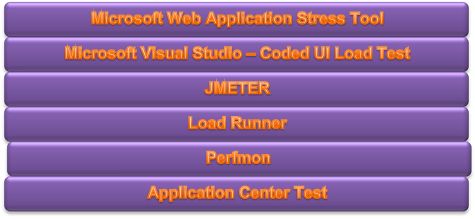

Load Testing Tools

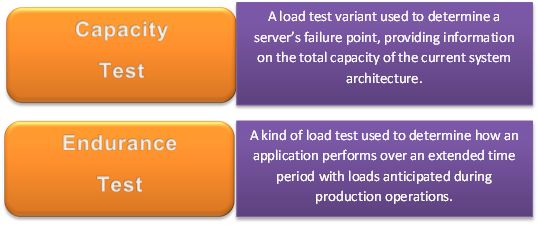

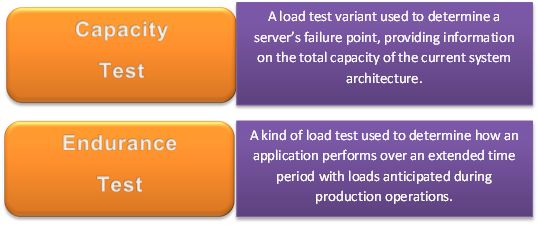

Various types of load test variants



Example of Requirement of load testing:

- Given a load of 50 requests per second for this URL and no other requests

- With database CPU utilization under 20% and web server CPU utilization under 10%

- The average time taken to receive the page is the key figure for judging the performance of the web page
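A requirement like the one above can be written down as a simple pass/fail check. The following is only a sketch: the function name, the dictionary keys, the measured values and the 500 ms limit for the average time are all hypothetical, and the CPU figures would have to come from your own monitoring of the database and web servers.

```python
def check_requirement(measured, max_avg_ms):
    """Compare measured values against the example requirement.

    measured   -- dict with hypothetical keys (see below)
    max_avg_ms -- assumed upper limit for the average receiving time
    """
    return {
        "load_reached": measured["requests_per_second"] >= 50,
        "db_cpu_ok": measured["db_cpu_percent"] < 20,
        "web_cpu_ok": measured["web_cpu_percent"] < 10,
        "avg_time_ok": measured["avg_response_ms"] <= max_avg_ms,
    }


# Hypothetical measurements, not taken from a real test run.
measured = {
    "requests_per_second": 50,
    "db_cpu_percent": 12.5,
    "web_cpu_percent": 11.0,
    "avg_response_ms": 334,
}

result = check_requirement(measured, max_avg_ms=500)
for name, ok in result.items():
    print(name, "OK" if ok else "FAILED")
print("PASS" if all(result.values()) else "FAIL")
```

With these made-up numbers the web server CPU check fails, so the requirement as a whole is not met even though the average time is acceptable.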

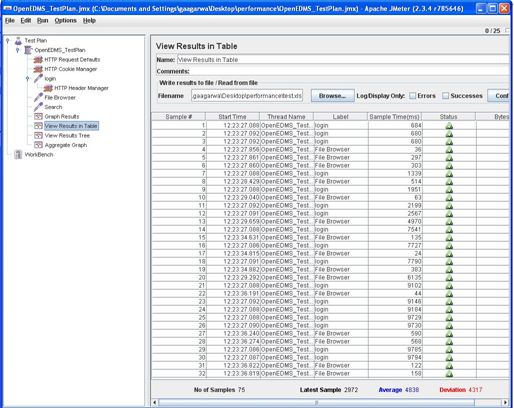

- Samples: A sample is one sampler call, which in our case is one request to a web page. A value of 51 means that JMeter made a total of 51 requests to the page, for example "http://www.google.com".

- Average: This is the average time taken to receive the web page. JMeter adds up the 51 receiving times and divides the sum by 51. The value is a measure of the performance of this web page: here, an average of 334 milliseconds is required to receive the page under our network conditions.

- Min and Max: These are the minimum and maximum times required to receive the web page.

- Std. Dev: This is the standard deviation of the receiving times, that is, how widely the individual times are spread around the average. The smaller this value, the more consistent the response times are.

- Error %: This value indicates the percentage of errors. For example, if 51 calls were made and all were received successfully, the error rate is 0. Any calls that are not received properly are counted as errors, and this value shows the number of errors as a percentage of the calls made.
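The columns described above can be reproduced by hand from a list of raw samples. The sketch below uses five made-up samples rather than the 51 from the report, and it assumes the population form of the standard deviation; JMeter's own calculation may differ in detail.

```python
import math


def summarize(samples):
    """samples: list of (elapsed_ms, success) tuples, one per sampler call."""
    times = [elapsed for elapsed, _ in samples]
    n = len(times)
    average = sum(times) / n
    # Population variance: mean squared distance from the average.
    variance = sum((t - average) ** 2 for t in times) / n
    errors = sum(1 for _, ok in samples if not ok)
    return {
        "samples": n,
        "average": average,
        "min": min(times),
        "max": max(times),
        "std_dev": math.sqrt(variance),
        "error_pct": 100.0 * errors / n,
    }


# Hypothetical timings in milliseconds; the last request failed.
samples = [(300, True), (320, True), (340, True), (360, True), (380, False)]
summary = summarize(samples)
for column, value in summary.items():
    print(column, value)
```

For these five samples the average is 340 ms, the standard deviation is about 28.3 ms and the error rate is 20%.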

Before we dive into the step-by-step instructions, it is a good idea to define the terms and make sure the definitions are clear.

Master: the system running the JMeter GUI, which controls the test

Slave: the system running jmeter-server, which takes commands from the GUI and sends requests to the target system(s)

Target: the web server we plan to stress test

Note: Starting the Test

Before starting the load test, you can double-check that the slave systems are working by opening jmeter.log in Notepad. You should see the following in the log: Jmeter.engine.RemoteJMeterEngineImpl: Starting backing engine. If you do not see this message, the JMeter server did not start correctly.
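The same log check can be scripted instead of opening the file in Notepad. This is a minimal sketch: the function names are hypothetical, the default path assumes jmeter.log is in the current directory, and the only log text used is the message quoted above.

```python
from pathlib import Path

# Message quoted in this article; only the trailing part is matched.
MARKER = "Starting backing engine"


def server_started(log_text):
    """Return True if any log line contains the expected message."""
    return any(MARKER in line for line in log_text.splitlines())


def check_log_file(path="jmeter.log"):
    """Read a jmeter.log file (assumed location) and run the check."""
    return server_started(Path(path).read_text(errors="replace"))


# In-memory examples so the sketch runs without a real log file.
good_log = "Jmeter.engine.RemoteJMeterEngineImpl: Starting backing engine\n"
bad_log = ""

print(server_started(good_log))
print(server_started(bad_log))
```

On a slave machine you would call check_log_file() with the path to the real jmeter.log.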

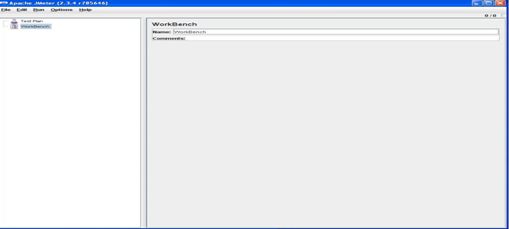

- Download the JMeter binary release from http://jmeter.apache.org/download_jmeter.cgi

- Extract the zip file and go to the bin folder.

- Double-click "jmeter.bat"

- This will start the JMeter application, and the following screen will be displayed:

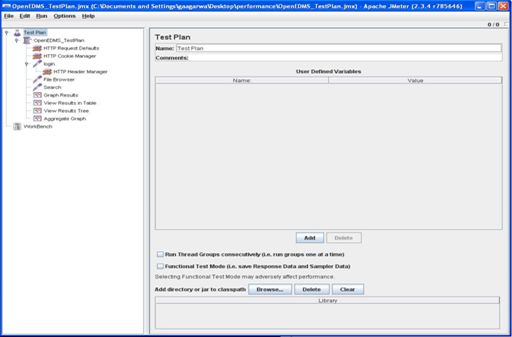
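The steps above start the GUI by double-clicking jmeter.bat. If you prefer to launch JMeter from a script, the command line can be assembled as sketched below. The install directory and file names are hypothetical; the -n (non-GUI), -t (test plan) and -l (results file) options are standard JMeter command-line options, but this article itself only uses the GUI.

```python
from pathlib import PureWindowsPath


def jmeter_command(install_dir, test_plan=None, results_file=None):
    """Build the command for jmeter.bat in the bin folder.

    Without a test plan the GUI is started; with one, a non-GUI run
    is requested via -n -t, optionally logging results with -l.
    """
    launcher = str(PureWindowsPath(install_dir) / "bin" / "jmeter.bat")
    if test_plan is None:
        return [launcher]
    command = [launcher, "-n", "-t", test_plan]
    if results_file is not None:
        command += ["-l", results_file]
    return command


# Hypothetical install location and file names.
gui = jmeter_command(r"C:\tools\apache-jmeter")
batch = jmeter_command(r"C:\tools\apache-jmeter", "TestPlan2.jmx", "results.jtl")
print(gui)
print(batch)
```

The resulting list can be passed to subprocess.run on a Windows machine where JMeter has been extracted.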

Part 1: How to record your web applications using Workbench for load test.

As mentioned above, JMeter can look difficult at first because a new user usually lacks the background needed to set up a load test environment. The following steps show one practical way to record the web application using the WorkBench and run load tests, much as you would with QTP or Visual Studio Coded UI.

- Go to File | Open.

- Browse to the sample test plan attached to this article (TestPlan2.jmx.jmx or Sample.jmx) and click Open.

- This will open the screen as shown below:

- Click on TestPlan2 on the left panel and edit the field "Number of Threads (users)" on the right panel. The sample value is 25; enter the number of users with which the test is to be conducted.

- Click on "HttpRequestDefaults" on the left panel and, on the right panel, edit the Server Name or IP (152.425.306.120 in the sample plan) and the Port Number (8080; change it if the Tomcat server is running on a different port). Do not enter "localhost" as the Server Name; the field must contain the machine name or IP address.

- Three HTTP requests have already been created: Login, File Browser and Search. You can run the test plan to test performance for these three requests. You can also add new requests to test against specific criteria, as discussed later on.

- To run the test plan, go to Run | Start. Make sure the Tomcat server is running.

- This will start executing the test plan.

- Once the test plan execution is complete, you can see the result in various formats as explained below.

- Click on Graph Results on the right panel. This displays the time taken for each request by plotting the requests on the x-y axis.

- Click on "View Results in Table" to see the results in tabular format

- Click on View Results Tree to view request and response data for each request.

- Click on "Aggregate Graph". Then click on "Display Graph" on the right panel. This will render the results in a graphical view.

- To create your own request for specific search criteria, a proxy server needs to be set up that will record the request to be tested.

- Right-click on WorkBench and select "Merge". Browse to the file "WorkBench.jmx" and click on "Open".

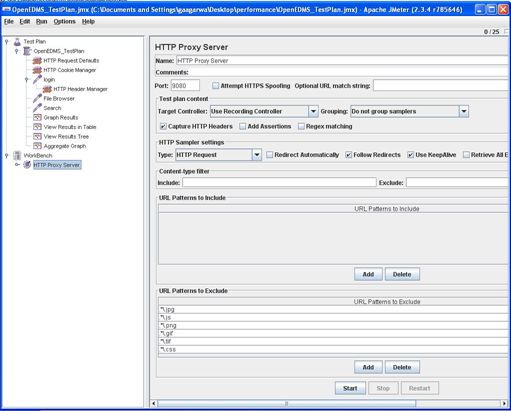
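The thread count and server settings edited in the GUI earlier are stored as properties in the .jmx file, which is XML, so they can also be changed by a script. The sketch below works on a heavily simplified fragment written for this example, not on the real TestPlan2 file; the property names ThreadGroup.num_threads, HTTPSampler.domain and HTTPSampler.port are the ones JMeter normally writes, and the host name "test-server-01" is hypothetical.

```python
import xml.etree.ElementTree as ET

# Simplified stand-in for a test plan; a real .jmx has many more elements.
JMX_FRAGMENT = """
<jmeterTestPlan>
  <hashTree>
    <ThreadGroup testname="TestPlan2">
      <stringProp name="ThreadGroup.num_threads">25</stringProp>
    </ThreadGroup>
    <ConfigTestElement testname="HttpRequestDefaults">
      <stringProp name="HTTPSampler.domain">localhost</stringProp>
      <stringProp name="HTTPSampler.port">8080</stringProp>
    </ConfigTestElement>
  </hashTree>
</jmeterTestPlan>
"""


def set_prop(root, prop_name, value):
    """Set every <stringProp name=prop_name> to value; return the count."""
    changed = 0
    for prop in root.iter("stringProp"):
        if prop.get("name") == prop_name:
            prop.text = str(value)
            changed += 1
    return changed


def get_prop(root, prop_name):
    """Return the text of the first matching <stringProp>, or None."""
    for prop in root.iter("stringProp"):
        if prop.get("name") == prop_name:
            return prop.text
    return None


root = ET.fromstring(JMX_FRAGMENT)
set_prop(root, "ThreadGroup.num_threads", 50)
# Machine name instead of "localhost", as the steps above require.
set_prop(root, "HTTPSampler.domain", "test-server-01")

print(get_prop(root, "ThreadGroup.num_threads"))
print(get_prop(root, "HTTPSampler.domain"))
print(get_prop(root, "HTTPSampler.port"))
```

For a real plan you would load the file with ET.parse, apply the same changes and write it back, after keeping a copy of the original.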

- Click on "Http Proxy Server" on the left panel. By default, the proxy server is configured to run on port 9080. If you want to run it on a different port, edit the Port field on the right panel. Then click on the Start button at the bottom of the panel. This will start the proxy server.

- Now open Internet Explorer and log in to OpenEDMS. Again, make sure you access the application using the server name or IP and not localhost.

- Click on the "Advance Search" tab.

- To start recording the search, the proxy server needs to be set up in IE.

- Go to "Tools" | "Internet Options" | "Connections" | "LAN Settings"

- Tick the checkbox "Use a proxy server for your LAN" and provide the address and port as per the configuration done previously.

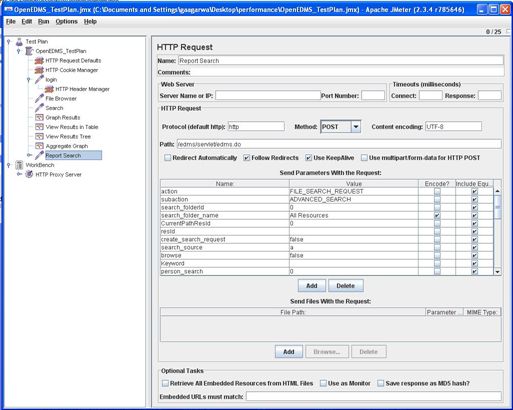

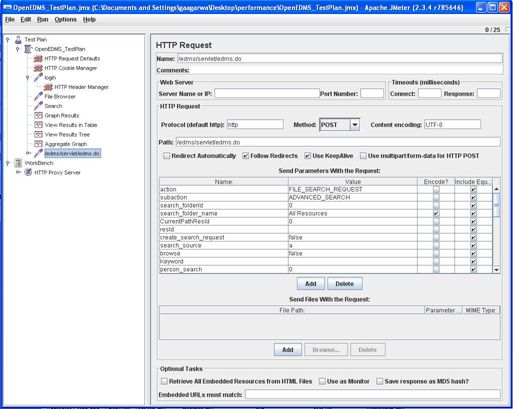

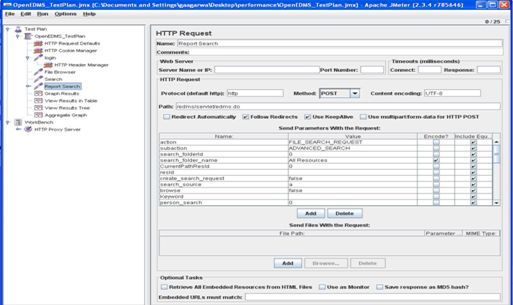
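The proxy setting in IE simply routes the browser's HTTP traffic through the JMeter proxy so that it can be recorded. Any HTTP client pointed at the same address and port should be picked up in the same way. The sketch below configures Python's standard urllib for this without sending a request; the host name "jmeter-host" is hypothetical, and 9080 is the default port mentioned earlier.

```python
import urllib.request


def proxy_opener(proxy_host, proxy_port=9080):
    """Return (opener, handler) that send HTTP requests via the proxy."""
    proxy_url = f"http://{proxy_host}:{proxy_port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url})
    opener = urllib.request.build_opener(handler)
    return opener, handler


# Hypothetical name of the machine running the JMeter proxy.
opener, handler = proxy_opener("jmeter-host")
print(handler.proxies)
```

Calling opener.open() with the application's URL would then send the request through the recording proxy, provided the proxy has been started.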

- Now as soon as you click on "Search" after filling the search criteria, a new request will be added in JMeter as shown.

- You can provide a meaningful name instead of "/edms/servlet/edms.do", for example Report Search, in the Name field on the right panel

- Now drag the Report Search item on the left panel and place it above the Graph Results as shown below.

- This way you can create your own search criteria to be tested. Now follow the same procedure as before to run the test and view the results in the desired format.